A Custom Framework for AI Governance in Education

AI is rapidly transforming education, yet robust governance lags; most institutions lack comprehensive policies, leaving them vulnerable.

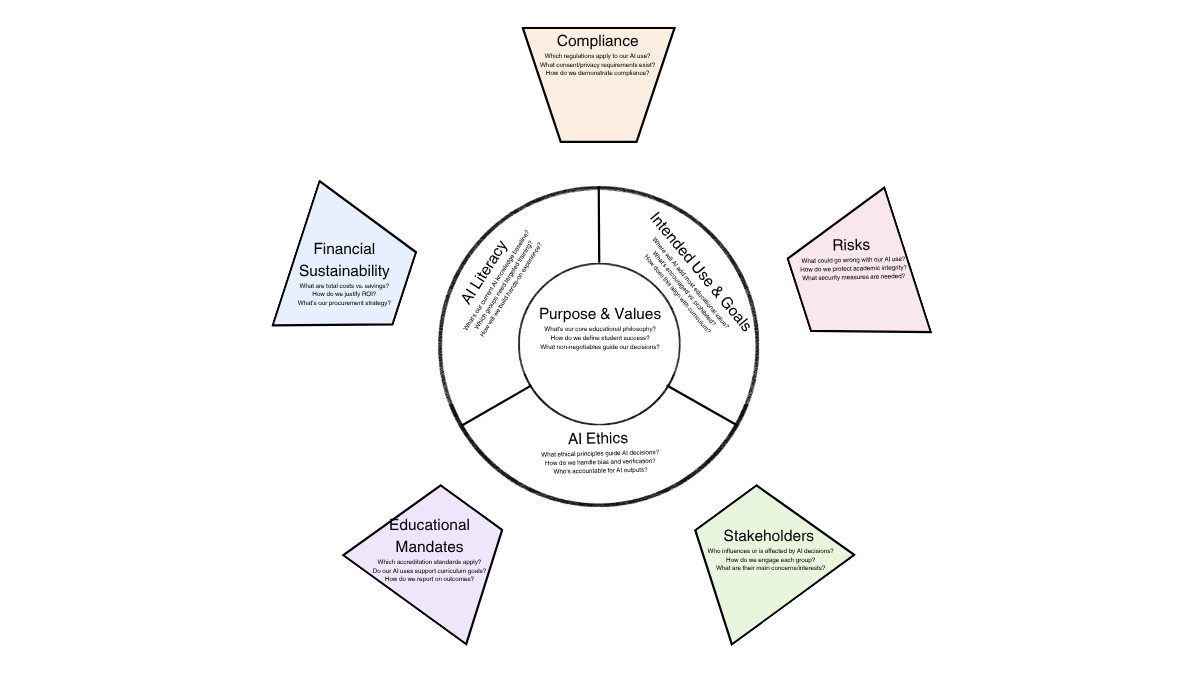

Drawing on extensive research into global standards and real-world governance case studies from universities and schools around the world, I’ve synthesized a custom AI governance framework for education.

This isn’t a one-size-fits-all checklist. It’s a flexible, layered framework that helps you align your values, manage risk, and actually make AI useful, without sacrificing trust or academic integrity.

As a consultant, I couldn’t leave it there, so I turned the framework into a canvas that you can sketch out and use to brainstorm the different elements of an AI governance strategy for your school or university.

Internal Drivers: Where Your AI Strategy Begins

I like starting from the inside out. If your AI policy isn’t built around your school’s mission, values, and real needs, it’s not going to work long-term.

It’s not just about writing rules. It’s about building a system that reflects who you are as a school or institution. Without that connection, your AI plan becomes a patchwork of vague policies disconnected from real classrooms, teachers, and student needs.

1. Values and Purpose

Your core beliefs should drive everything, from what tools you choose to how you handle academic integrity. In every project I’ve led, we start by asking:

- What’s our educational philosophy?

- What are our values as a school?

This isn’t just a theoretical exercise. These questions lay the foundation for how your institution defines trust, equity, and purpose in a digital age. When values aren’t front and centre, AI adoption tends to drift toward automation for the sake of convenience, rather than meaningful impact.

The UNESCO Recommendation on the Ethics of Artificial Intelligence highlights this clearly. It calls for AI systems that enhance human capabilities rather than replace them, and that actively support inclusivity, fairness, and academic freedom.

Similarly, the OECD Principles on AI promote a governance model built around human-centred values, human rights, and democratic oversight.

Principles to anchor this:

- Augment, don’t automate: AI should lighten the load, not strip away professional judgment.

- Protect student voice and educator judgement: Keep agency in human hands.

- Prioritise well-being, equity, and creativity: Let AI be the support, not the driver.

In practice, this means choosing tools that align with your ethical stance, whether that’s banning surveillance tools, allowing assistive content generation, or designing inclusive systems for multilingual learners.

If your tools don’t reflect your values, they’ll eventually work against you.

2. AI Literacy

Effective organizational change requires broad participation across all levels, and developing AI governance systems is no exception.

Successfully navigating the rise in AI adoption demands inclusive engagement from throughout your organization to ensure both buy-in and practical implementation.

To engage everyone effectively, you must first ensure there’s at least a basic level of understanding about AI across your organization.

At this stage, assess the current understanding levels across different groups and design targeted AI literacy training to address the gaps:

- Teachers: Effective prompting, ethical use, workload reduction, assessment redesign

- Students: Bias detection, critical thinking, academic honesty

- Parents/Guardians: Understanding tools in use and data handling practices

- Administrators/IT: Risk analysis, vendor assessment, regulatory compliance

Build practical AI literacy:

Learn by doing. Experiment with different tools. Create “AI labs”. Here are proven approaches from leading institutions:

- Pilot projects in low-risk areas: Virginia Tech encourages controlled experimentation to gather feedback and refine policies iteratively

- Faculty-led AI committees: University of Michigan established committees that oversee AI literacy training and report on effective use cases

- Hands-on exploration before implementation: Johns Hopkins encourages faculty to explore and experiment with AI tools before using them in classes

- Targeted training for specific disciplines: Virginia Tech designs programs specifically for non-STEM faculty to address the “AI divide”

- Centralized resource hubs: Institutions create campus-wide centers for AI exploration and information sharing

- Traffic light systems for clear guidance: Georgia schools use simple Red/Yellow/Green categorizations to help users understand appropriate AI use

3. Intended Use and Educational Goals

Translate your institution’s vision into concrete AI applications by defining specific, educationally-grounded use cases. Here’s how leading institutions approach this:

Establish clear use boundaries: University of Michigan categorizes AI use into three levels – encouraged (brainstorming, editing, grammar checking), allowed with disclosure (content generation with clear attribution), and prohibited (impersonation, unauthorized group work assistance).

Create role-specific guidelines: Johns Hopkins provides distinct guidance for faculty (using AI for course design and adaptive feedback) versus students (verification requirements, bias awareness, transparent attribution). Georgia schools explicitly prohibit AI for high-stakes decisions like IEP goals and educator evaluations while encouraging it for lesson planning and rubric development.

Redesign assessments strategically: Rather than simply detecting AI use, institutions are rethinking assignments. University of Michigan emphasizes protecting “the cognitive dimension of learning” – ensuring AI enhances rather than replaces critical thinking processes.

Require transparent disclosure: Johns Hopkins requires students to explain their original prompts, identify incorrect AI output, and demonstrate how they revised content for accuracy. This shifts the focus from prohibition to responsible collaboration.

Align with curriculum goals: All AI applications must directly support existing pedagogical objectives rather than becoming distractions from core learning outcomes.
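
One way to make use boundaries like these easy to look up is a small policy table. The sketch below is a minimal illustration, assuming the three levels and example activities from the University of Michigan description above. The names `UseLevel`, `USE_POLICY`, and `check_use` are hypothetical, and treating unlisted activities as “needs review” is an assumption of this sketch, not part of any institution’s policy.

```python
from enum import Enum
from typing import Optional


class UseLevel(Enum):
    ENCOURAGED = "encouraged"
    ALLOWED_WITH_DISCLOSURE = "allowed with disclosure"
    PROHIBITED = "prohibited"


# Illustrative entries only, taken from the examples in the text above.
USE_POLICY = {
    "brainstorming": UseLevel.ENCOURAGED,
    "editing": UseLevel.ENCOURAGED,
    "grammar checking": UseLevel.ENCOURAGED,
    "content generation": UseLevel.ALLOWED_WITH_DISCLOSURE,
    "impersonation": UseLevel.PROHIBITED,
    "unauthorized group work assistance": UseLevel.PROHIBITED,
}


def check_use(activity: str) -> Optional[UseLevel]:
    """Return the policy level for an activity.

    Returns None for activities that are not listed yet (an assumption of
    this sketch), so that a person, not the lookup, makes the decision.
    """
    return USE_POLICY.get(activity.strip().lower())


for activity in ("Brainstorming", "content generation", "video generation"):
    level = check_use(activity)
    print(activity, "->", level.value if level else "not classified, needs review")
```

Keeping unlisted activities unclassified, rather than silently allowing or banning them, leaves the judgement with educators, which fits the human-centred principles set out earlier.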

4. AI Ethics: Institutional Moral Compass

Having established your institutional values, built AI literacy, and defined intended uses, you now translate these foundations into an internal moral compass for ethical decision-making.

This is where many institutions struggle. They recognize AI’s power but try to regulate it through plagiarism detectors or blanket bans rather than building upon their established groundwork.

Your ethical framework should directly reflect the values and use cases you’ve already defined:

Operationalize your guiding philosophy: Transform your core values into actionable ethical principles. If you’ve established “pedagogy first” as a value, Johns Hopkins demonstrates this by requiring AI to support course goals like critical thinking rather than replace them. University of Michigan’s “cognitive dimension” protection directly implements their human-centric values.

Apply literacy to bias mitigation: Use the AI understanding you’ve built to recognize that models reflect training data biases. Your literacy training should have prepared faculty to identify these issues. Johns Hopkins requires educators to actively ensure AI-generated content covers diverse perspectives (knowledge they gained through targeted training).

Align verification with intended uses: Cornell’s approach shows how verification requirements match their defined research applications. Since you’ve already specified where AI can be used, now define the verification standards for each use case your institution has approved.

Connect attribution to transparency goals: Build on your intended use guidelines by requiring disclosure that matches your institution’s specific applications. University of Michigan’s detailed attribution requirements directly support their transparent AI integration approach.

Reinforce accountability through role-specific ethics: Your role-specific guidelines now need corresponding accountability measures, ensuring users remain professionally responsible for outputs within their defined scope.

This ethical framework transforms your foundational work into practical moral guidance.

External Pressures

While your internal drivers establish the foundation for AI governance, no institution operates in isolation. External forces, from regulatory requirements to stakeholder expectations, create essential guardrails that shape your AI initiatives.

These external pressures don’t undermine your internal framework; instead, they provide the boundaries within which your values-driven approach must operate.

They ensure your AI governance remains legally compliant, financially viable, and responsive to your broader community.

5. Risks (Anticipating & Managing Harm, including Security)

Your internal ethical framework must now confront real-world threats that could undermine everything you’ve built. The EU AI Act classifies several education uses of AI, including admissions decisions, evaluation of learning outcomes, and exam proctoring, as “high-risk”. That isn’t arbitrary: it reflects genuine dangers that demand systematic mitigation.

The Academic Integrity Crisis:

Traditional plagiarism detection fails with generative AI. Students can submit entirely AI-written work that passes detection tools, while others struggle to understand what constitutes “appropriate assistance.”

The solution isn’t better detection; it’s redefining what original work means in an AI-augmented world.

Hidden Biases in AI Systems:

- AI models trained on biased datasets perpetuate discrimination

- Automated grading systems may favor certain writing styles or cultural perspectives

- Recommendation algorithms can reinforce existing educational inequalities

- Facial recognition and emotion inference systems pose particular risks to student privacy (the EU AI Act now prohibits emotion inference in educational institutions, with narrow medical and safety exceptions)

The Security Minefield:

When faculty input sensitive research data into ChatGPT, they’re essentially handing intellectual property to commercial entities. Students entering personal information face similar risks.

The solution requires both technological safeguards and behavioral change through targeted training.

Cognitive Dependency: Perhaps the most insidious risk is students relying on AI to do their thinking rather than to support their learning. Virginia Tech’s research shows this concern is driving faculty resistance, but the answer isn’t prohibition. Instead, institutions must deliberately design AI integration to enhance rather than replace critical thinking skills.

Reputational Fallout: One data breach, one biased algorithm, or one academic integrity scandal can destroy years of institutional trust. Proactive risk management isn’t just operational; it’s existential for institutional credibility.

6. Stakeholders

AI governance cannot happen in isolation. The most thoughtful policies fail without genuine buy-in from the people who must live with them daily.

Students as Co-Creators: Virginia Tech discovered a significant “AI divide” between STEM and non-STEM students. Rather than designing policies for students, involve them directly through AI ambassador programs or student ethics committees.

Teacher Autonomy Within Boundaries: University of Michigan grants instructors flexibility to set their own AI policies within institutional guidelines. This respects pedagogical expertise while maintaining consistency.

Parent Partnership:

- Explain educational rationale with concrete examples

- Address privacy concerns in plain language

- Provide opt-out mechanisms where required

IT as Strategic Partner: Academic leaders often make AI decisions without understanding technical implications. Georgia’s vendor vetting process shows how technical and pedagogical considerations must merge seamlessly.

Community Dialogue: Public institutions especially need broader support. Regular communication and transparent reporting help build the social license needed for educational innovation.

Engagement isn’t a checkbox exercise. It’s an ongoing conversation that continuously shapes your AI approach.

I recommend you create a stakeholder map at this stage, plotting key groups by their influence and interest in AI initiatives. This visual guide helps you prioritize engagement efforts and reveals unexpected allies or resistance points.
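
To show how an influence-and-interest map can turn into engagement priorities, here is a minimal sketch. It assumes a hypothetical 1 to 5 scoring scale and borrows its quadrant labels from the commonly used power/interest grid. The groups and scores are invented placeholders, and `Stakeholder` and `quadrant` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Stakeholder:
    name: str
    influence: int  # 1 (low) to 5 (high); hypothetical scale
    interest: int   # 1 (low) to 5 (high); hypothetical scale


def quadrant(s: Stakeholder, threshold: int = 3) -> str:
    """Place a stakeholder in one of four engagement quadrants."""
    high_influence = s.influence >= threshold
    high_interest = s.interest >= threshold
    if high_influence and high_interest:
        return "engage closely"
    if high_influence:
        return "keep satisfied"
    if high_interest:
        return "keep informed"
    return "monitor"


# Placeholder scores for illustration; replace them with your own assessment.
stakeholders = [
    Stakeholder("Teachers", influence=4, interest=5),
    Stakeholder("School board", influence=5, interest=2),
    Stakeholder("Students", influence=2, interest=5),
    Stakeholder("Local community", influence=2, interest=2),
]

for s in stakeholders:
    print(f"{s.name}: {quadrant(s)}")
```

The scores matter less than the conversation that produces them. A group that lands in “keep informed” but feels it belongs in “engage closely” is exactly the kind of resistance point the map should surface.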

7. Compliance (Adhering to Regulations)

Regulatory compliance represents both constraint and opportunity. Meeting legal standards builds stakeholder trust and demonstrates institutional responsibility, while violations can destroy years of relationship building.

Interconnected Pressures: Notice how compliance issues weave through your entire framework:

- Data protection satisfies both legal requirements and parent concerns about privacy.

- Bias mitigation addresses stakeholder equity demands while meeting anti-discrimination laws.

- Hallucination risks create both academic integrity challenges and potential legal liability.

These aren’t separate problems but interconnected challenges requiring coordinated responses.

Building Trust Through Compliance: When parents see rigorous FERPA/GDPR adherence, they trust your data handling. When faculty observe accessibility compliance, they feel confident recommending tools to diverse learners. Compliance becomes a stakeholder engagement tool, not just a legal requirement.

Mapping Your Regulatory Landscape:

- Data Protection: FERPA, COPPA, state privacy laws

- Age Restrictions: Platform terms, parental consent requirements

- Intellectual Property: Copyright implications of AI training data

- Accessibility: ADA compliance for AI interfaces

- Anti-Discrimination: Civil rights protections in algorithmic decisions

At this stage, map the main regulations your AI systems must respect. Highlight both risks (potential violations) and opportunities (competitive advantage through demonstrated responsibility) for each use case you’ve defined.
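
A regulatory map like this can be kept as simple structured notes, one entry per use case. The sketch below is only an illustration: the use cases, risks, and opportunities are placeholder examples, and the names `ComplianceEntry` and `incomplete` are hypothetical. It flags use cases where either the risk side or the opportunity side has not been filled in yet.

```python
from dataclasses import dataclass, field


@dataclass
class ComplianceEntry:
    use_case: str
    areas: list[str]  # regulatory areas, e.g. from the list above
    risks: list[str] = field(default_factory=list)
    opportunities: list[str] = field(default_factory=list)


def incomplete(entries: list[ComplianceEntry]) -> list[str]:
    """Return use cases that still lack a risk note or an opportunity note."""
    return [e.use_case for e in entries if not e.risks or not e.opportunities]


# Placeholder entries for illustration; they are not an assessment of any tool.
entries = [
    ComplianceEntry(
        use_case="AI tutoring system",
        areas=["Data Protection", "Accessibility"],
        risks=["student data shared with a vendor"],
        opportunities=["documented privacy review builds parent trust"],
    ),
    ComplianceEntry(
        use_case="AI-assisted grading",
        areas=["Anti-Discrimination"],
        risks=["scores skewed by writing style"],
    ),
]

print(incomplete(entries))  # ['AI-assisted grading']
```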

8. Financial Sustainability

External budget pressures and board oversight create hard financial realities that idealistic AI plans often ignore. Your governance framework must demonstrate clear value or face inevitable budget cuts.

Conduct a comprehensive financial analysis at this stage. Map both the full cost structure and potential savings across your institution. AI procurement is just the tip of the iceberg. Factor in training, technical support, data storage, compliance auditing, and ongoing oversight.

Calculate the savings side: How much teacher time could AI free up for student interaction? What administrative tasks could be automated? University of Michigan faculty report significant time savings in lesson planning and resource creation.

Document these efficiency gains alongside direct cost reductions.
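
The cost and savings sides can be set next to each other with some simple arithmetic. The sketch below assumes the cost categories named above. Every figure in it is an invented placeholder rather than a benchmark, and the function names are hypothetical.

```python
# All figures are invented placeholders for illustration, not benchmarks.
annual_costs = {
    "licensing": 20_000,
    "training": 8_000,
    "technical support": 5_000,
    "data storage": 2_000,
    "compliance auditing": 4_000,
    "ongoing oversight": 3_000,
}


def total_cost_of_ownership(costs: dict[str, float]) -> float:
    """Sum every cost category, not just the licensing fee."""
    return sum(costs.values())


def staff_time_value(hours_saved_per_week: float, weeks_per_year: int,
                     staff_count: int, hourly_cost: float) -> float:
    """Estimate the yearly value of staff time freed up by AI tools."""
    return hours_saved_per_week * weeks_per_year * staff_count * hourly_cost


tco = total_cost_of_ownership(annual_costs)
# Assumed inputs: 1.5 hours saved per week, 36 teaching weeks,
# 40 teachers, and an hourly staff cost of 35.
savings = staff_time_value(1.5, 36, 40, 35.0)
net = savings - tco

print(f"Total cost of ownership: {tco:,.0f}")    # 42,000
print(f"Value of staff time freed: {savings:,.0f}")  # 75,600
print(f"Net annual value: {net:,.0f}")           # 33,600
```

Time freed for student interaction is a reallocation rather than cash saved, so treat the savings figure as an estimate of value and present it alongside the qualitative benefits.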

Strategic procurement essentials:

- Leverage collective purchasing power with other institutions

- Negotiate educational discounts and data portability clauses

- Evaluate total cost of ownership, not just licensing fees

- Plan for scalability to avoid costly migrations

ROI extends beyond dollars. Include qualitative benefits like improved learning outcomes, teacher satisfaction, and institutional reputation. Georgia’s vendor evaluation process demonstrates how comprehensive assessment prevents costly mistakes.

Your financial analysis should link AI spending to strategic institutional goals, showing stakeholders how investments support the mission while building competitive advantage. Boards and donors need to see both fiscal responsibility and educational impact.

9. External Educational Mandates

Accreditation bodies and government agencies impose non-negotiable educational standards that your AI initiatives must support, not undermine.

Now’s the time to reality-check your intended AI uses against these external requirements.

Review your use cases from step three through this compliance lens. That blue-sky brainstorming about AI applications now faces hard constraints. Can your planned AI tutoring system actually support state curriculum standards? Will AI-assisted grading meet accreditation requirements for fair assessment?

Curriculum alignment requires AI tools to enhance mandated learning objectives without replacing authentic skill development. If standards require critical thinking through written analysis, those AI writing assistants you identified need stricter boundaries than initially planned.

Assessment adaptation becomes critical when AI can complete traditional assignments.

Revisit your assessment redesign plans. Some institutions focus on process over product, requiring students to show thinking rather than just outputs.

Performance opportunities:

- Graduation rates may improve with AI-powered personalized support

- Employment outcomes could strengthen through AI literacy training

- Teacher evaluation systems must account for appropriate AI integration

Your reporting systems need to track AI impact on mandated outcomes. This compliance step transforms your intended uses from wishful thinking into accountable implementation, demonstrating that innovation serves educational standards, not just institutional convenience.

Conclusion: Your AI Governance Strategy Starts Here

AI governance isn’t about perfect policies or complete certainty. It’s about building a thoughtful, adaptive framework that reflects your institution’s values while navigating real-world pressures and opportunities.

The nine elements I’ve outlined provide a roadmap, but every school and university will implement them differently based on their unique context, constraints, and goals.

To help you get started, I’ve created an AI Governance Model Canvas that distills this framework into a single-page strategic planning tool.

Whether you’re leading a faculty workshop, presenting to your board, or mapping out your institution’s AI future, this canvas provides a structured way to work through each element systematically.

It’s designed for brainstorming, collaboration, and turning complex governance concepts into actionable decisions.

The best AI governance framework is the one you actually use. Start with your values, engage your community, and build something that works for your students, your teachers, and your institution’s mission.

The technology will keep evolving, but a solid governance foundation will help you navigate whatever comes next.